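1 Introduction

LAMMPS is a massively parallel code for atomic and molecular simulation. It is used for atomistic, molecular, and mesoscale systems, scales efficiently in parallel, and is widely applied in materials science, physics, and chemistry.

We recommend using the Singularity image; see the multi-node LAMMPS job section below for how to run it.

1.1 Available versions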

| Version | Build method | Target CPU architecture | Target partition hardware / node count |
|---|---|---|---|
| lammps20220817git_broadwell | source | broadwell | Xeon E5-2686 v4, 72 CPUs / 1 node |
| lammps20220817git_zen | source | zen | EPYC 7601, 128 CPUs / 1 node |
| lammps20220817git_zen2 | source | zen2 | EPYC 7542, 128 CPUs / 1 node |
| lammps20220817git_zen3 | source | zen3 | Ryzen 9 5950X, 32 CPUs / 4 nodes |

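1.2 About the versions

Take lammps20220817git_broadwell as an example. lammps20220817git means the software was compiled on 2022-08-17 from source taken from the release channel of the LAMMPS GitHub repository. The broadwell suffix means the build is optimized for Intel Broadwell CPUs; the broadwell build, for instance, links against Intel MKL. Likewise, zen (Zen 1), zen2, and zen3 refer to generations of AMD's Zen microarchitecture. The builds can be used interchangeably, but a mismatch reduces run-time efficiency, so submit jobs with the prebuilt LAMMPS version that matches the CPU architecture of the target nodes.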
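To pick the matching build on a node, the CPU model can be mapped to the build suffix. The following is a minimal sketch, not a site-provided tool: the function name `arch_for_cpu` is hypothetical, and the patterns only cover the four CPU models listed in section 1.1.

```bash
#!/bin/bash
# Map a CPU model string to the build suffix used in section 1.1.
# Hypothetical helper: only the four CPU models from the table are recognized.
arch_for_cpu() {
    local model="$1"
    case "$model" in
        *E5-2686*)      echo broadwell ;;
        *"EPYC 7601"*)  echo zen ;;
        *"EPYC 7542"*)  echo zen2 ;;
        *5950X*)        echo zen3 ;;
        *)              echo unknown; return 1 ;;
    esac
}

arch_for_cpu "AMD EPYC 7542 32-Core Processor"   # prints zen2

# On a compute node (assumes lscpu is installed):
#   model=$(lscpu | sed -n 's/^Model name:[[:space:]]*//p')
#   echo "lammps20220817git_$(arch_for_cpu "$model")"
```

The function returns a non-zero status for unrecognized models, so a calling script can stop rather than silently fall back to a mismatched build.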

1.3 Packages included in the compiled binary

Note: some CPU-specific optimization flags and compiler settings are omitted.

-D BUILD_MPI=yes -D BUILD_OMP=yes -D PKG_MOLECULE=yes -D PKG_OPT=yes -D PKG_RIGID=yes -D PKG_MISC=yes -D PKG_KSPACE=yes -D PKG_OPENMP=yes -D PKG_MANYBODY=yes -D PKG_MC=yes -D PKG_REAXFF=yes -D PKG_EXTRA-DUMP=yes -D PKG_KOKKOS=yes -D Kokkos_ENABLE_OPENMP=yes -D WITH_GZIP=yes -D LAMMPS_EXCEPTIONS=yes -D LAMMPS_MEMALIGN=8 -D INTEL_ARCH=cpu -D FFT=MKL

1.4 Annotated sbatch script

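#!/bin/bash

#SBATCH --partition=Nebula32_zen3 ## Slurm partition to queue in; defaults to the Nebula partition if omitted

#SBATCH --nodelist=node60,node7,node10 ## request these specific nodes (the job is allocated every node listed, so keep the list consistent with -N); if omitted, Slurm picks nodes in the partition automatically

#SBATCH --error="Error.%x" ## Slurm error log

#SBATCH --output="Output.%x" ## Slurm output log

#SBATCH -N 1 ## number of nodes requested

#SBATCH --ntasks-per-node=16 ## maximum number of MPI processes per node

#SBATCH --cpus-per-task=1 ## maximum number of OpenMP threads per MPI process; hyper-threading is disabled, so this is 1 ("cpus" here means logical cores)

job=${SLURM_JOB_NAME} ## job name, also used as the input file name

v_np=${SLURM_NTASKS_PER_NODE:-1} ## number of MPI processes

v_t=${SLURM_CPUS_PER_TASK:-1} ## number of OpenMP threads

tmpdir=/tmp/$USER@$SLURM_JOB_ID ## scratch directory: /tmp/<user>@<jobid> (e.g. lym@154)

cp -r $SLURM_SUBMIT_DIR $tmpdir ## copy the whole input directory to the scratch directory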

### logging ### record the start time, job ID, job name, and MPI/OpenMP counts in $tmpdir/$job.out

echo "Job execution start: $(date)" >> $tmpdir/$job.out

echo "Slurm Job ID is: ${SLURM_JOB_ID}" >> $tmpdir/$job.out

echo "Slurm Job name is: ${SLURM_JOB_NAME}" >> $tmpdir/$job.out

echo "# of MPI processes, v_np is: ${v_np}" >> $tmpdir/$job.out

echo "# of OpenMP threads, v_t is: ${v_t}" >> $tmpdir/$job.out

### set environment ### set environment variables and load software packages

. /mnt/softs/spack/share/spack/setup-env.sh ## initialize spack

spack load gcc@12.1.0%gcc@9.4.0 arch=linux-ubuntu20.04-zen2 ffmpeg@4.4.1%gcc@9.4.0 arch=linux-ubuntu20.04-zen2 openmpi@4.1.4%gcc@9.4.0 arch=linux-ubuntu20.04-zen2 fftw@3.3.10%gcc@9.4.0 arch=linux-ubuntu20.04-zen2

## spack load gcc@12.1.0%gcc@9.4.0 arch=linux-ubuntu20.04-xxx ffmpeg@4.4.1%gcc@9.4.0 arch=linux-ubuntu20.04-xxx ## openmpi@4.1.4%gcc@9.4.0 arch=linux-ubuntu20.04-xxx

## spack load intel-mkl arch=linux-ubuntu20.04-xxx ### packages to load for other targets; xxx = broadwell/zen/zen2/zen3

#ulimit -s unlimited # remove the stack-size limit (ulimit sets or reports user process resource limits)

#ulimit -l unlimited # remove the locked-memory limit

export OMP_NUM_THREADS=$v_t ## number of OpenMP threads

export OMP_PROC_BIND=false ## OpenMP thread binding (disabled here)

export OMP_PLACES=threads ## OpenMP placement unit

### run ### run LAMMPS

cd $tmpdir

mpirun --display-map --use-hwthread-cpus -np $v_np --map-by core /mnt/softs/LAMMPS/lammps20220817git/lammps/build_zen2/lmp -k on t $v_t -sf kk -in $job

#$v_t --cpus-per-proc 2 -bind-to core

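## if each MPI process is bound to one physical core, OpenMP can use at most 2 threads, because the threads of a process stay on the cores owned by that process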

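### logging and retrieving outputs ### record the job end time in $tmpdir/$job.out

echo "Job end: $(date), retrieving files" >> $tmpdir/$job.out

# mv $tmpdir/* $SLURM_SUBMIT_DIR ## move the results back to the input directory

# Note: LAMMPS writes no scratch files; every file is an output file, so the whole directory can be copied back

# For other software, the maintainer of that software should adjust this step as needed

echo "Job end: $(date)" >> $tmpdir/$job.out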

############# other comments #############################################################

#Please note that mpirun automatically binds processes as of the start of the v1.8 series. Three binding patterns are used in the absence of any further directives:

#Bind to core:

# when the number of processes is <= 2

#Bind to socket:

# when the number of processes is > 2

#Bind to none:

# when oversubscribed

## If Open MPI is not explicitly told how many slots are available on a node (e.g., if a hostfile is used and the number of slots is not specified for a given node), it will determine a maximum number of slots for that node in one of two ways:

#1. Default behavior

#By default, Open MPI will attempt to discover the number of processor cores on the node, and use that as the number of slots available.

#2. When --use-hwthread-cpus is used

#If --use-hwthread-cpus is specified on the mpirun command line, then Open MPI will attempt to discover the number of hardware threads on the node, and use that as the number of slots available.
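The copy-in, run, copy-back pattern that the script above builds around $tmpdir can be written as one reusable function. This is a minimal sketch under stated assumptions rather than the site's script: the name `stage_run` is hypothetical, and it copies results back even when the command fails so that logs are not lost.

```bash
#!/bin/bash
# stage_run SRC SCRATCH CMD...
# Hypothetical helper: copy SRC into SCRATCH, run CMD inside SCRATCH,
# copy everything back into SRC, then remove SCRATCH.
stage_run() {
    local src="$1" scratch="$2" status=0
    shift 2
    mkdir -p "$scratch" || return 1
    cp -r "$src"/. "$scratch"/ || return 1
    # Run in a subshell so the caller's working directory is unchanged.
    ( cd "$scratch" && "$@" ) || status=$?
    # Copy results back even if the command failed, so logs are kept.
    cp -r "$scratch"/. "$src"/ && rm -rf "$scratch"
    return "$status"
}

# Possible use inside a job script (variables as defined in the script above):
#   stage_run "$SLURM_SUBMIT_DIR" "/tmp/$USER@$SLURM_JOB_ID" \
#       mpirun -np "$v_np" lmp -in "$job"
```

The function returns the exit status of the command, so the job script can still report a failed run after the files have been retrieved.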

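2 Submitting a job

2.1 Prepare the LAMMPS input script and data

LAMMPS input scripts are very flexible: commands can be kept separate from the input data, simple data can be embedded directly in the script, and shell commands can be called from it (which makes for a fairly steep learning curve). The details are skipped here; a simple LJ model serves as the example.

Create an lmptest directory in your home directory and an LJ.in file inside it.

cd ~ && mkdir lmptest && touch lmptest/LJ.in && nano lmptest/LJ.in

Enter the following content: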

# 3d Lennard-Jones melt

variable x index 1

variable y index 1

variable z index 1

variable xx equal 20*$x

variable yy equal 20*$y

variable zz equal 20*$z

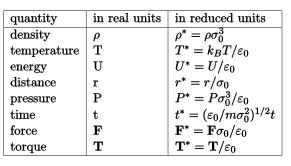

units lj

atom_style atomic

lattice fcc 0.8442

region box block 0 ${xx} 0 ${yy} 0 ${zz}

create_box 1 box

create_atoms 1 box

mass 1 1.0

velocity all create 1.44 87287 loop geom

pair_style lj/cut 2.5

pair_coeff 1 1 1.0 1.0 2.5

neighbor 0.3 bin

neigh_modify delay 0 every 20 check no

fix 1 all nve

run 100000

2.2 Submit the job
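Before submitting, it can help to estimate the size of the job. The LJ input above fills a box of (20*x) by (20*y) by (20*z) fcc unit cells, and an fcc cell holds 4 atoms, so the atom count follows directly from x, y, and z. The sketch below does that arithmetic; the function name `lj_atoms` is hypothetical.

```bash
#!/bin/bash
# Atoms created by the LJ input: 4 atoms per fcc unit cell,
# in a box of (20*x) x (20*y) x (20*z) cells.
lj_atoms() {
    local x="${1:-1}" y="${2:-1}" z="${3:-1}"
    echo $(( 4 * 20*x * 20*y * 20*z ))
}

lj_atoms 1 1 1   # default box: prints 32000
lj_atoms 2 2 1   # x and y doubled: prints 128000
```

Because x, y, and z are index-style variables, LAMMPS lets them be overridden on the command line with -var (for example `-var x 2 -var y 2`). The cluster wrapper script shown earlier does not pass -var, so using this would require editing its run line.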

cd ~/lmptest && sbatch -J LJ.in /mnt/softs/Scripts/lammps_xxx.sh

Here xxx is the CPU architecture of the target cluster: xxx = broadwell/zen/zen2/zen3.
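Note that the job name passed with -J doubles as the input file name, because the sbatch script runs `lmp ... -in $job` with job=${SLURM_JOB_NAME}. The following sketch assembles the submit command and rejects an unknown architecture; it only prints the command so it can be inspected first. The function name `lammps_submit_cmd` is hypothetical.

```bash
#!/bin/bash
# Print the sbatch command for an input file and a CPU architecture.
# Hypothetical helper: it prints the command instead of running it.
lammps_submit_cmd() {
    local input="$1" arch="$2"
    case "$arch" in
        broadwell|zen|zen2|zen3) ;;
        *) echo "unknown architecture: $arch" >&2; return 1 ;;
    esac
    echo "sbatch -J $input /mnt/softs/Scripts/lammps_${arch}.sh"
}

lammps_submit_cmd LJ.in zen3
# prints: sbatch -J LJ.in /mnt/softs/Scripts/lammps_zen3.sh
```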

As for the optimal job configuration, it depends on how 'optimal' is defined. For the shortest time to solution, it is often better to let Slurm decide how to distribute the ranks across the nodes, because it can then start your job sooner.

#SBATCH --ntasks=64 # Number of MPI ranks

#SBATCH --mem-per-cpu=100mb # Memory per core

For optimal job performance (for benchmarks, cost analysis, etc.) you also need to take switches into account (although with 30 nodes you probably have only one switch).

#SBATCH --ntasks=64 # Number of MPI ranks

#SBATCH --exclusive

#SBATCH --switches=1

#SBATCH --mem-per-cpu=100mb # Memory per core

Using --exclusive will make sure your job will not be bothered by other jobs.
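As a rough check on what these directives request in total, the memory follows from the rank count and --mem-per-cpu. The sketch below assumes one CPU is allocated per MPI rank (no --cpus-per-task); the function name `total_mem_mb` is hypothetical.

```bash
#!/bin/bash
# Total memory requested by --ntasks and --mem-per-cpu,
# assuming one CPU is allocated per task.
total_mem_mb() {
    local ntasks="$1" mem_per_cpu_mb="$2"
    echo $(( ntasks * mem_per_cpu_mb ))
}

total_mem_mb 64 100   # 64 ranks at 100 MB each: prints 6400
```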

3 Multi-node jobs

#!/bin/bash

#SBATCH --partition=Nebula32_zen3

#SBATCH --nodelist=node4,node5,node6,node7

#SBATCH --error="Error.%x"

#SBATCH --output="Output.%x"

#SBATCH --nodes=4

#SBATCH --ntasks-per-node=16

#SBATCH --cpus-per-task=2

job=${SLURM_JOB_NAME}

v_np=${SLURM_NTASKS_PER_NODE:-1}

v_t=${SLURM_CPUS_PER_TASK:-1}

mkdir -p /home/lym/tmp

tmpdir=/home/lym/tmp/$USER@$SLURM_JOB_ID

cp -r $SLURM_SUBMIT_DIR $tmpdir

### logging ###

echo "Job execution start: $(date)" >> $tmpdir/$job.out

echo "Slurm Job ID is: ${SLURM_JOB_ID}" >> $tmpdir/$job.out

echo "Slurm Job name is: ${SLURM_JOB_NAME}" >> $tmpdir/$job.out

echo "# of MPI processor,v_np is: ${SLURM_NTASKS_PER_NODE:-1}" >> $tmpdir/$job.out

echo "# of openMP thread, v_t is: ${v_t}" >> $tmpdir/$job.out

### run ###

cd $tmpdir

srun --mpi=pmix \
--cpu-bind=cores --cpus-per-task=$v_t \
--export=ALL,OMPI_MCA_btl_tcp_if_include=192.168.33.0/24,OMP_PROC_BIND=True,OMP_PLACES=cores,OMP_NUM_THREADS=$v_t,OMP_STACKSIZE=512m \
singularity exec /mnt/softs/singularity_sifs/lmp_gnu_kokkos_omp_mpi_debain_jammy_stable_23Jun2022.sif lmp -k on t $v_t -sf kk -in $job

### logging and retrieving outputs ###

echo "Job end: $(date), retrieving files" >> $tmpdir/$job.out

###### remove the stale copies of the Slurm logs before moving the outputs back #####

rm -rf $tmpdir/Error.*

rm -rf $tmpdir/Output.*

mv $tmpdir/* $SLURM_SUBMIT_DIR

echo "Job end: $(date)" >> $SLURM_SUBMIT_DIR/$job.out

/mnt/softs/Scripts/mail.sh 243183540@qq.com
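The script above requests 4 nodes with 16 MPI ranks per node and 2 CPUs per rank, which exactly fills the 32 CPUs per node listed for the zen3 partition in section 1.1. A small check like the following can catch an oversubscribed layout before submission; the function name `check_layout` is hypothetical.

```bash
#!/bin/bash
# check_layout NODES TASKS_PER_NODE CPUS_PER_TASK CPUS_PER_NODE
# Hypothetical helper: verify that ranks x threads fits on one node
# and report the total number of MPI ranks.
check_layout() {
    local nodes="$1" tpn="$2" cpt="$3" cpn="$4"
    local used=$(( tpn * cpt ))
    if (( used > cpn )); then
        echo "oversubscribed: $used CPUs requested per node, $cpn available" >&2
        return 1
    fi
    echo "ranks=$(( nodes * tpn )) threads_per_rank=$cpt cpus_per_node=$used/$cpn"
}

check_layout 4 16 2 32
# prints: ranks=64 threads_per_rank=2 cpus_per_node=32/32
```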

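Command explanation (annotated for reading only; a comment after a trailing backslash breaks the line continuation, so do not paste this form into a script):

srun --mpi=pmix \ # select the MPI/PMI type

--cpu-bind=cores \ # bind each MPI process to physical CPU cores

--export=ALL,\ # pass environment variables to the job

OMPI_MCA_btl_tcp_if_include=192.168.33.0/24,\ # network segment used for MPI communication

OMP_PROC_BIND=True,\ # OpenMP thread binding rule

OMP_PLACES=cores,\ # OpenMP placement unit for thread binding

OMP_NUM_THREADS=$v_t,\ # number of OpenMP threads

OMP_STACKSIZE=512m \ # OpenMP stack size

singularity exec /xxx/lammps_xx.sif lmp -k on t $v_t -sf kk -in $job # run the lammps binary inside the Singularity image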
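Since the --export value is one long comma-separated string, it can be easier to maintain when assembled from separate NAME=value pairs. This is a minimal sketch; the function name `export_arg` is hypothetical, and it assumes no value contains a comma.

```bash
#!/bin/bash
# Join NAME=value pairs into a single --export argument for srun.
# Hypothetical helper; values must not contain commas.
export_arg() {
    if [ "$#" -eq 0 ]; then
        echo "--export=ALL"
        return
    fi
    local IFS=,          # "$*" joins arguments with the first character of IFS
    echo "--export=ALL,$*"
}

threads=2
export_arg OMP_PROC_BIND=True OMP_PLACES=cores "OMP_NUM_THREADS=$threads" OMP_STACKSIZE=512m
# prints: --export=ALL,OMP_PROC_BIND=True,OMP_PLACES=cores,OMP_NUM_THREADS=2,OMP_STACKSIZE=512m
```

In a job script the result could be passed as `srun --mpi=pmix "$(export_arg ...)" ...`.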

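• For compute-intensive LAMMPS jobs (for example, with the ReaxFF force field), multi-node runs speed up almost linearly.

• A plain LJ force field is cheap to compute, so inter-node communication (frequent hand-offs between ranks drive up the communication cost) becomes the bottleneck for speedup.

• Set the MPI binding parameters and the OpenMP thread binding rules with care. In this example each MPI process is bound to one physical core, so OpenMP can use at most two threads (the process owns a single core, that is, two hardware threads).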
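Whether a given force field scales "almost linearly" can be checked by comparing the wall-clock time of a single-node run with a multi-node run of the same input. The sketch below computes speedup and parallel efficiency from two timings; the function name `parallel_efficiency` and the timings are hypothetical, not measurements from this cluster.

```bash
#!/bin/bash
# parallel_efficiency T1 N TN
# T1: time on 1 node, TN: time on N nodes (seconds).
# Prints "speedup efficiency", where efficiency = speedup / N.
parallel_efficiency() {
    awk -v t1="$1" -v n="$2" -v tn="$3" \
        'BEGIN { s = t1 / tn; printf "%.2f %.2f\n", s, s / n }'
}

parallel_efficiency 400 4 125   # hypothetical timings: prints 3.20 0.80
```

The "Loop time of ..." line that LAMMPS writes at the end of each run is one place to read the timings from. An efficiency close to 1.00 corresponds to near-linear scaling; a much lower value suggests communication is the bottleneck.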

Table 1. Using Slurm to run software built against different MPI implementations (LAMMPS as the example)

| MPI implementation | PMI setting | Slurm usage example |
|---|---|---|
| OpenMPI | --mpi=pmix | srun -N 2 -c 2 --mem=32GB --mpi=pmix lmp -in in.input |
| MPICH2 | --mpi=pmi2 | srun -N 2 -c 2 --mem=32GB --mpi=pmi2 lmp -in in.input |
| Intel MPI | export I_MPI_PMI_LIBRARY=/xxx/libpmi.so | srun -N 2 -c 2 --mem=32GB lmp -in in.input |
| MVAPICH2 | --mpi=pmi2 | srun -N 2 -c 2 --mem=32GB --mpi=pmi2 lmp -in in.input |
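The mapping in Table 1 can be encoded in a small lookup so that a job script picks the srun option from the MPI implementation the binary was built against. This is a sketch only: the function name `srun_mpi_flag` and the lowercase keys are hypothetical.

```bash
#!/bin/bash
# Map an MPI implementation to the srun --mpi option from Table 1.
# Intel MPI prints an empty line: it is configured through the
# I_MPI_PMI_LIBRARY environment variable rather than a --mpi option.
srun_mpi_flag() {
    case "$1" in
        openmpi)          echo "--mpi=pmix" ;;
        mpich2|mvapich2)  echo "--mpi=pmi2" ;;
        intelmpi)         echo "" ;;
        *) echo "unknown MPI implementation: $1" >&2; return 1 ;;
    esac
}

srun_mpi_flag openmpi    # prints --mpi=pmix
srun_mpi_flag mvapich2   # prints --mpi=pmi2
```

Running `srun --mpi=list` shows which MPI plugin types the local Slurm installation actually supports, which is worth checking before relying on any entry above.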